Visualize a benchmark

After running a benchmark, benchopt automatically displays the results in an HTML dashboard. Let’s explore the dashboard features on the Lasso benchmark.

Hint

Head to Get started to first install benchopt and set up the Lasso benchmark.

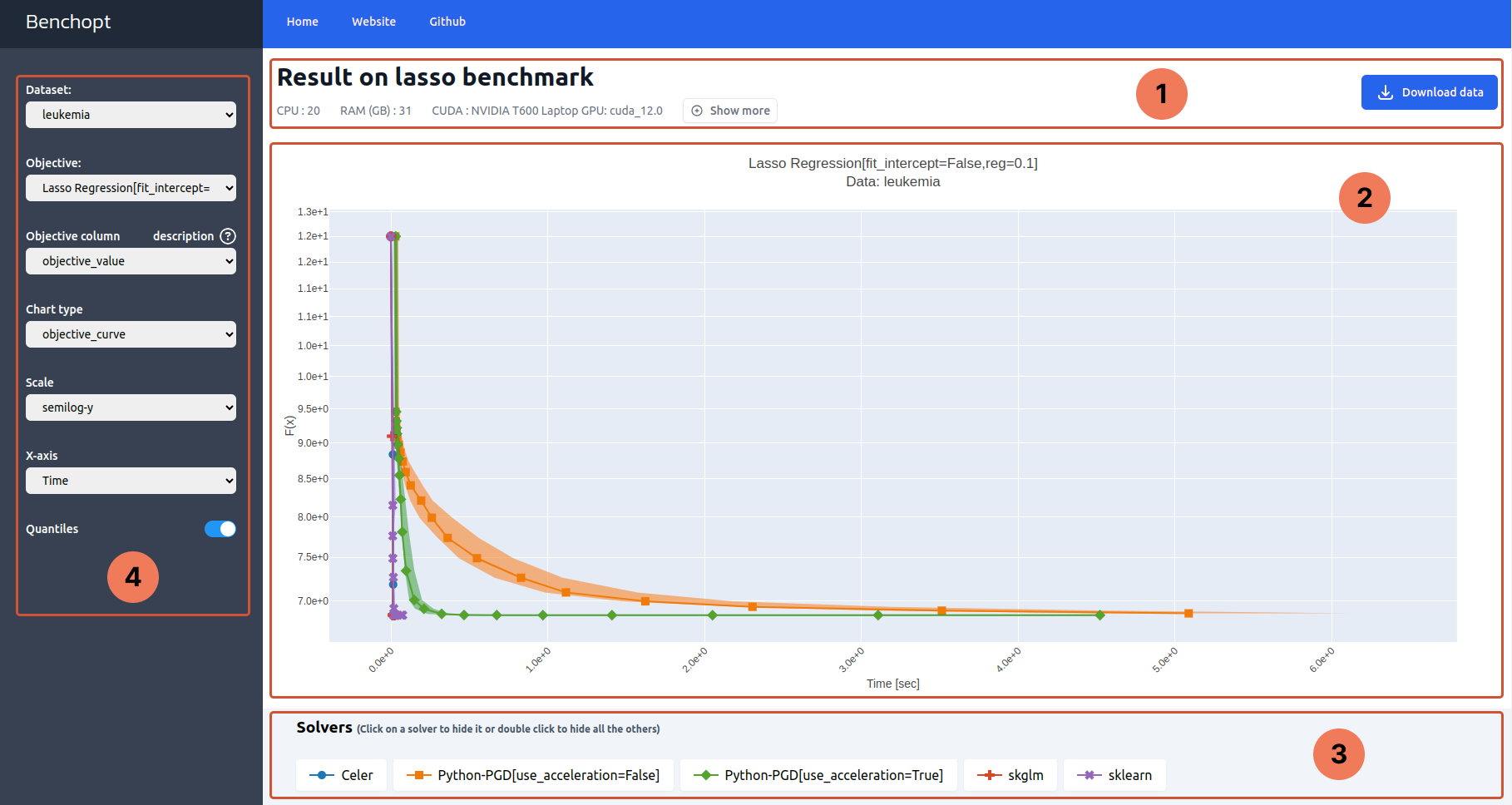

Part 1: Header

Part 1 contains the benchmark metadata: the benchmark title and the specifications of the machine used to run it.

On the right-hand side is a Download button that saves the results of the benchmark as a .parquet file.
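The downloaded file can also be inspected outside the dashboard. The following is a minimal sketch, assuming pandas and a parquet engine (such as pyarrow) are installed. The file name `results.parquet` and the helpers `load_results` and `summarize` are hypothetical, and no particular column layout is assumed.

```python
import pandas as pd


def load_results(path):
    """Read a downloaded results file into a DataFrame."""
    # read_parquet needs a parquet engine such as pyarrow or fastparquet.
    return pd.read_parquet(path)


def summarize(df):
    """Return the number of rows and the column names of a results table."""
    return {"n_rows": len(df), "columns": list(df.columns)}


# Example usage (hypothetical file name):
# df = load_results("results.parquet")
# print(summarize(df))
```

Listing the columns first is a cautious way to discover what the file contains before filtering or plotting it.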

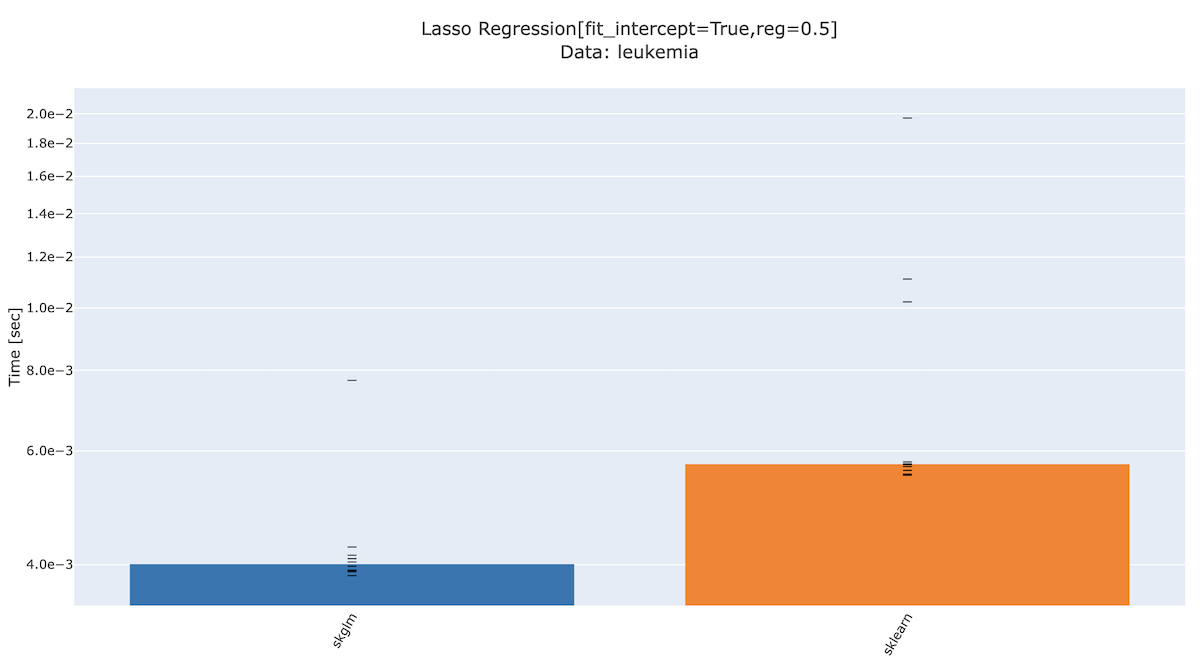

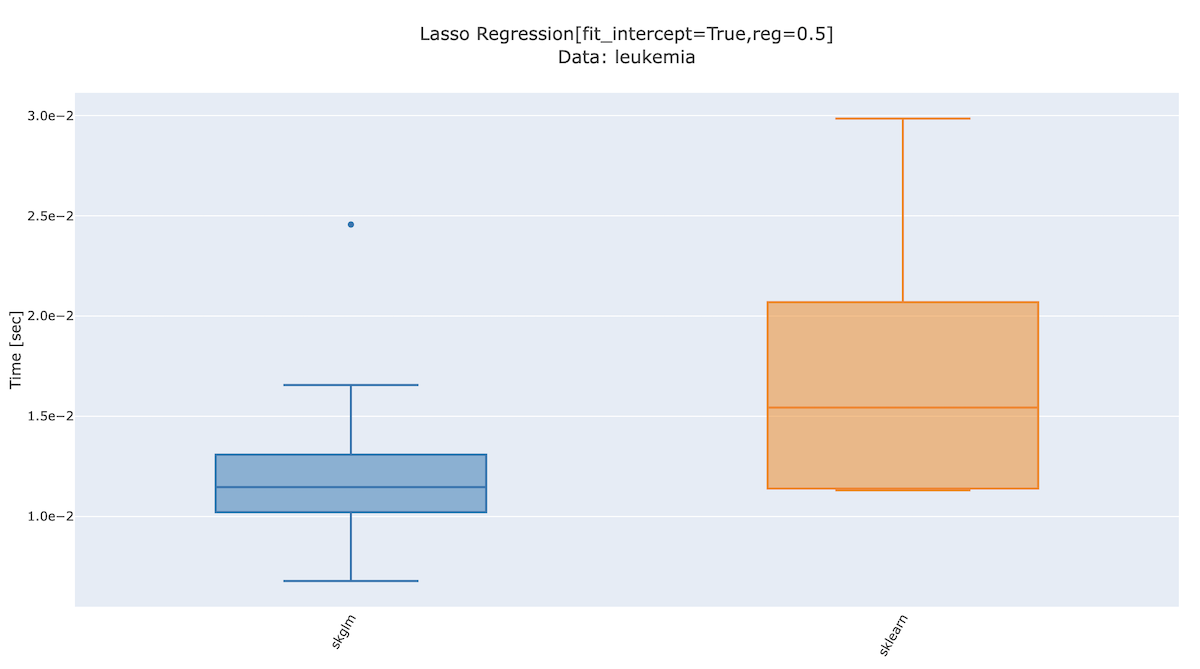

Part 2: Figure

Part 2 is the main figure, which plots the tracked metrics over the course of the benchmark run. Its title shows the names of the objective and the dataset, along with the parameters that produced the plot.

Hovering over the figure makes a modebar appear on the right side. Use it to interact with the figure, e.g. to zoom in and out on particular regions of the plot.

Part 3: Legend

The legend maps every curve to a solver.

Clicking on a legend item hides/shows its corresponding curve. Similarly, double-clicking on a legend item hides/shows all other curves. Finally, hovering over a legend item shows a tooltip with details about the solver.

Note

The displayed details about the solver are the content of the solver’s docstring, if present.
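As an illustration, here is a minimal sketch of a solver class that carries a docstring. The class below is a hypothetical stand-in: in an actual benchmark it would subclass benchopt's `BaseSolver` and implement the solver's methods. How benchopt itself extracts the docstring is not shown here; `inspect.getdoc` is used only to display the text.

```python
import inspect


class Solver:
    """Gradient descent with a fixed step size.

    Hypothetical solver used only to illustrate a docstring.
    """

    # Hypothetical solver name, chosen for illustration.
    name = "GD"


# inspect.getdoc returns the docstring with its indentation cleaned up.
doc = inspect.getdoc(Solver)
print(doc)
```

A short first line that summarizes the method tends to read well in a tooltip.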